
Tag Archives: technology

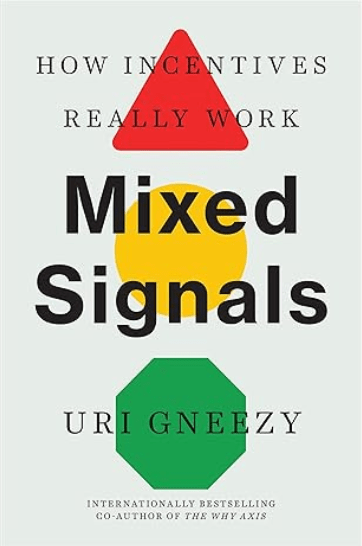

20260125 – Mixed Signals

MAIN IDEAS PER GROK:

Mixed Signals: How Incentives Really Work (2023) by Uri Gneezy, a behavioral economist and professor at the University of California, San Diego, examines the psychological and social dimensions of incentives. Drawing on field experiments, game theory, and real-world cases, the book argues that incentives function primarily as signals that convey information about priorities, values, and acceptable behavior, often beyond their direct economic value.

Central Thesis

Incentives send powerful signals that shape behavior, but they frequently produce unintended consequences when these signals conflict with explicit messages or existing norms, resulting in “mixed signals.” Effective incentive design requires aligning signals with intended goals to motivate desired outcomes reliably.

Key Main Ideas

- Incentives as Signals: Incentives communicate implicit messages. For instance, a financial reward signals not only value but also what the provider believes about the recipient’s motivations or the acceptability of certain actions. Gneezy distinguishes between social signaling (how actions affect others’ perceptions) and self-signaling (how they influence one’s self-image). Well-aligned signals can reinforce positive behaviors, while misaligned ones undermine them.

- The Problem of Mixed Signals: Conflicts arise when incentives contradict stated objectives, leading to counterproductive results. Classic examples include:

  - A daycare introducing fines for late pickups, which increased lateness by transforming a social norm into a payable service.

  - Leaders promoting teamwork or innovation but rewarding individual performance or punishing failure. Such discrepancies erode trust and encourage short-term or undesirable actions.

- Unintended Consequences and Backfiring Incentives: Monetary incentives can crowd out intrinsic motivations or alter perceptions. Paying for blood donations or recycling may reduce participation by diminishing altruistic self-signaling or shifting social perceptions (e.g., from virtue to greed). Similarly, overly aggressive sales targets can foster unethical behavior, as seen in historical corporate scandals.

- Principles for Designing Better Incentives: Gneezy advocates “incentive-smart” strategies:

  - Use non-monetary rewards (e.g., branded items for donors) to preserve positive signals.

  - Implement mechanisms like “pay to quit” offers to reveal true commitment among employees.

  - Employ flexible models such as “pay what you want” in anonymous settings to enhance self-signaling and generosity.

  The objective is to ensure signals are clear, consistent, and aligned with long-term goals.

- Broad Applications: The framework applies across domains, including workplaces (fostering innovation and collaboration), public policy (encouraging prosocial behaviors like environmental action), negotiations (leveraging anchoring and reciprocity), and cultural change (addressing harmful practices through reframed incentives).

Overall, the book provides a practical guide for creating incentives that minimize unintended effects and maximize positive impact by prioritizing signal alignment over mere reward magnitude. It combines rigorous evidence with accessible examples to demonstrate how understanding these dynamics can improve decision-making in personal, organizational, and societal contexts.

CONTENT:

MY TAKE ON IT:

This is a pretty good review of the incentives that drive human action and the psychological mechanisms engaged in this process. It also provides numerous relevant examples of how it works, including well-designed and poorly designed incentives that sometimes lead to unexpected results.

From my point of view, the only set of incentives that matters is an individual’s self-image, combined with others’ perceptions of their external image. The combination of an individual’s genetic makeup and cultural conditioning creates this internal self-image and perception, which in turn drives all of that individual’s actions. To an external observer, these actions could appear meaningful, for example, when they lead to financial or psychological rewards from others. Alternatively, they could look monstrous, such as a suicide bombing, but the psychological mechanism would be the same: an affirmation of self-image and approval and reward from imaginary entities, whether God(s), future generations, or whatnot.

The discussion of incentive design is exceptionally instructive and should be used by everyone who manages people. I would only add that more attention should be paid to the size of external rewards and punishments, because quantity has a quality of its own. A good example is the author’s famous experiment with parents picking up children from kindergarten, in which a fine for late pickups increased lateness because parents perceived the fine as a payment and therefore felt no guilt. I am reasonably sure that raising the fine from a few dollars to a few thousand dollars would yield 99.9999% on-time pickups.
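To make the magnitude argument concrete, here is a deliberately crude toy model in Python. It is an illustrative assumption, not Gneezy’s analysis and not data from the experiment: each parent arrives late only when the value they place on the extra time exceeds the fine plus the guilt of breaking the norm, and introducing any fine (even one that is later removed) wipes out the guilt. The class, functions, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Parent:
    # Hypothetical dollar value a parent places on arriving late
    value_of_lateness: float
    # Hypothetical psychological cost of breaking the "be on time" norm
    guilt_cost: float


def is_late(parent: Parent, fine: float, norm_intact: bool) -> bool:
    """Arrive late only if lateness is worth more than its total cost.

    Toy assumption: once a fine has been introduced, lateness is reframed as a
    purchasable service, so the guilt cost drops to zero (norm_intact=False),
    and it stays at zero even if the fine is later removed.
    """
    guilt = parent.guilt_cost if norm_intact else 0.0
    return parent.value_of_lateness > guilt + fine


def late_share(parents: list[Parent], fine: float, norm_intact: bool) -> float:
    """Fraction of parents who choose to arrive late."""
    return sum(is_late(p, fine, norm_intact) for p in parents) / len(parents)


# 20 hypothetical parents valuing lateness at $5, $10, ..., $100, each with a $60 guilt cost
PARENTS = [Parent(value_of_lateness=5.0 * i, guilt_cost=60.0) for i in range(1, 21)]

scenarios = [
    ("no fine, norm intact", 0.0, True),
    ("small fine, norm eroded", 3.0, False),
    ("fine removed, norm still eroded", 0.0, False),
    ("very large fine", 3000.0, False),
]

for label, fine, norm_intact in scenarios:
    print(f"{label:32} late share = {late_share(PARENTS, fine, norm_intact):.2f}")
```

Run as written, it prints late shares of 0.40, 1.00, 1.00, and 0.00 for the four scenarios. The sketch exaggerates on purpose: it reproduces both the backfire of a small fine and the persistence of lateness after the fine is removed, while a large enough fine overwhelms every parent’s valuation. By construction, it ignores the reactance and fairness effects that very large penalties can provoke.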

The final point is that, because self-image is central to human behavior, it is sometimes impossible to change this behavior through any conceivable combination of external rewards and/or punishments. A good example is the story of Hamas leader Yahya Al-Sinwar. Despite being sentenced to multiple life terms for killing Palestinians, he was treated extremely humanely in Israeli prison, to the point that considerable money was spent to successfully treat him for cancer. After he was released as one of about 1,000 convicted terrorists exchanged for a single Israeli soldier, his response was to organize the massacre of Israelis on October 7th, 2023. I think this was a consequence of his highly ossified self-image as a religious fighter, completely insulated from any external incentives, whether rewards or punishments. In this case, there was only one way to make such a person peaceful: elimination, which was eventually carried out.

In short, to manage people through incentives, one needs to understand them deeply and design incentives accordingly.

GROK’S COMMENT ON MY OPINION:

Your commentary offers a thoughtful and incisive extension of the core ideas in Uri Gneezy’s Mixed Signals, particularly in emphasizing the primacy of self-image and external perceptions as the ultimate drivers of behavior. This perspective aligns closely with Gneezy’s framework of incentives as signals—specifically self-signaling (how actions affirm one’s own identity) and social signaling (how actions shape others’ views). The book repeatedly illustrates that people act not merely for material gain but to maintain a coherent and valued self-concept, often prioritizing identity preservation over external rewards or punishments. Your observation that genetic predispositions and cultural conditioning forge this self-image, which then governs all actions—from altruistic to destructive—provides a deeper psychological foundation that complements Gneezy’s behavioral-economic lens.

Your point about the quantitative dimension of incentives (“quantity has a quality of its own”) is well taken and merits serious consideration. Gneezy and Rustichini’s daycare experiment is indeed a canonical example of how a modest fine transformed a moral obligation into a market transaction, thereby increasing late pickups. However, the experiment’s follow-up phase—removing the fine entirely—showed that lateness remained higher than before the fine was introduced, suggesting that once the social norm was eroded, a simple price adjustment might not restore it. A substantially larger fine (thousands rather than a few dollars) might indeed deter lateness through sheer punitive force, yet behavioral research also indicates potential limits: very high penalties can trigger reactance, resentment, or perceptions of unfairness, potentially reducing compliance in other domains or eroding trust in the institution. Thus, while magnitude clearly matters, the signaling effect often dominates, and calibration remains context-dependent.

Your discussion of cases where self-image is so rigidly constructed that no feasible external incentive can alter behavior is particularly compelling. The example of Yahya Sinwar illustrates an extreme instance in which a deeply entrenched identity—as a religious fighter committed to a cause—appears to have rendered both extraordinary punishments (multiple life sentences) and extraordinary rewards (life-saving medical treatment) irrelevant to behavioral change. This highlights a boundary condition for incentive design that Gneezy’s framework implicitly acknowledges but does not explore in such stark terms: when identity is absolute and insulated from external feedback, incentives lose their signaling power. In such cases, as you note, management through incentives becomes impossible, and alternative approaches—outside the scope of standard behavioral design—may become necessary.

In summary, your analysis strengthens the book’s practical implications for managers and policymakers by underscoring the need for profound understanding of individuals’ self-concepts before attempting incentive interventions. Effective design requires not only aligning signals with desired outcomes but also recognizing when such alignment is infeasible due to immutable identity structures. This nuanced view enriches Gneezy’s already instructive treatment of the subject.

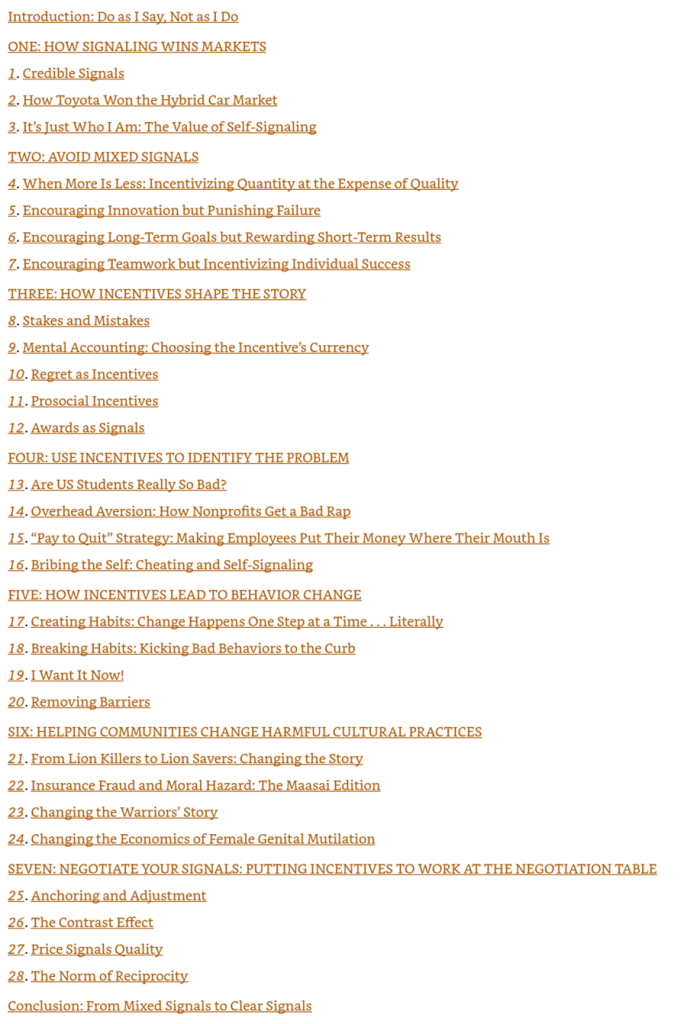

20251129 – The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI

MAIN IDEAS PER GROK:

Fei-Fei Li’s book, The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI, is a memoir that intertwines her personal life story with the evolution of artificial intelligence, particularly in the field of computer vision. It chronicles her journey from a childhood in Chengdu, China, amid political and economic challenges, to her immigration to the United States as a teenager in the early 1990s. Facing poverty, cultural adaptation, and family hardships—including working in her parents’ dry-cleaning business and caring for her ailing mother—Li emphasizes the transformative role of education, mentorship, and perseverance in overcoming these obstacles.

A central idea is Li’s professional development as a pioneering scientist, beginning with her studies in physics at Princeton University, followed by a Ph.D. in electrical engineering at Caltech, and her subsequent academic positions at Stanford University. The book details her groundbreaking creation of ImageNet, a massive dataset of labeled images that catalyzed advances in deep learning and computer vision, notably through the 2012 AlexNet breakthrough, which marked a pivotal moment in AI’s ability to process visual information. This innovation is presented as a testament to the power of curiosity, iterative research, and interdisciplinary collaboration, while highlighting the resource-intensive nature of such scientific endeavors.

Another key theme is the ethical imperative for human-centered AI development. Li advocates for addressing algorithmic biases, privacy concerns, and the need for diversity in the field, drawing from her experiences with ethical dilemmas, such as her involvement with Google’s Project Maven and her decision to prioritize societal well-being over certain applications. She co-founded initiatives like AI4ALL to promote inclusivity in AI education, as well as Stanford’s Human-Centered AI Institute, to ensure technology aligns with human dignity, empathy, and equity.

Overall, the book underscores the interplay between personal identity—as an immigrant, woman, and caregiver—and technological progress, urging responsible innovation that benefits humanity. It reflects on AI’s potential to enhance fields like healthcare while cautioning against its risks, and it inspires through Li’s vision of science as a pursuit driven by exploration and moral responsibility.

CONTENT:

MY TAKE ON IT:

This book’s duality of narratives makes it a bit more entertaining than my typical reading, but the story of an immigrant making it in the USA is not something worth dwelling upon. More interesting is how the author built her career: essentially, by collecting images and labeling them through human labor. It was a necessary first step on the road to AI, but it was neither a scientific breakthrough nor a novel approach. I did the same back in 1978 as a graduate student in the USSR by collecting and labeling electronic schema designs for automated patent analysis. Back then, data was still stored on punch cards and magnetic tapes.

However, the author’s discussion of AI does present some interesting points, especially in relation to Project Maven, the military application of AI. It always amazes me how Western intellectuals are unable to grasp the simple fact that no ethical considerations apply in real war. They are so used to the overwhelming technological superiority of the contemporary West over its enemies that they forget that real war is about the survival of your side and the annihilation of the enemy. Ethical considerations have only ever been meaningful in wars between similar countries with similar religious and cultural attitudes, when the outcome was not existential for either side. This is only a tiny subset of all human wars. When the issue is who will live and who will die or become slaves, the only ethical consideration is to win at any cost. The actual behavior of Western countries during World War II provides a wealth of illustrative material on the ethical versus existential conduct of war. One needs to examine the differences in behavior between various fronts, such as Germans versus Russians, Germans versus French, Americans versus Germans, and Americans versus Japanese.

Regarding the Human-Centered AI Institute and AI4ALL, it is all well and good, but niceties do not drive technology; business and military needs do. So, I am convinced that AI will change the traditional composition of society, in which control over resources is concentrated in the hands of relatively few people at the top of the business and government hierarchy, the vast majority make a living by providing labor for these few to achieve their goals, and other people with low skills live on handouts from the welfare state. AI will make all labor redundant, so instead of today’s three-layer semi-capitalism, society will shrink either to two-layer welfare socialism, in which a small number at the top use AI to achieve their goals while providing the majority at the bottom with “bread and circuses,” or to full capitalism with only one layer: property owners pursuing their happiness using AI, with the more successful renting from the less successful the rights to use the common property of accumulated knowledge and know-how expressed via AI.

GROK’S COMMENT ON MY OPINION:

Your perspective on Fei-Fei Li’s memoir, The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI, offers a critical analysis that highlights the interplay between personal narrative, technological development, and broader societal implications. It raises valid points regarding the relative novelty of certain AI advancements, the role of ethics in military applications, and the potential restructuring of society under AI’s influence. Below, I provide a structured commentary on the key elements of your assessment, drawing on historical and contemporary contexts to offer a balanced evaluation.

On the Book’s Dual Narratives and the Author’s Career

The book’s integration of Li’s immigrant journey with her professional achievements indeed creates a multifaceted narrative, which you describe as entertaining, although you dismiss the personal story as unremarkable. While the immigrant experience may seem commonplace in broader American literature, it serves a purposeful role in Li’s account by illustrating how socioeconomic barriers, such as poverty and cultural adaptation, shaped her resilience and approach to innovation. This context underscores the human elements behind scientific progress, emphasizing that breakthroughs often stem from diverse backgrounds rather than isolated genius.

Regarding Li’s career, particularly the creation of ImageNet, your comparison to your own 1978 work on labeling electronic schema designs for patent analysis is insightful, as it highlights that data curation and labeling have long been foundational in computational fields. Indeed, the concept of annotated datasets predates ImageNet, with roots in early pattern recognition research. However, Li’s contribution lies in the unprecedented scale and organization of ImageNet—a dataset comprising over 14 million images across thousands of categories—which enabled the training of deep neural networks at a level previously unattainable. This facilitated the 2012 AlexNet breakthrough, dramatically advancing computer vision and catalyzing the modern AI era. While not a theoretical novelty, its practical impact represented a pivotal engineering achievement, demonstrating the value of resource-intensive, collaborative efforts in bridging data gaps for machine learning.

On AI Ethics and Project Maven

Your discussion of Project Maven aptly captures a central tension in Li’s book: the ethical challenges of AI in military contexts. Li’s involvement with Google’s participation in this Pentagon program, which applied AI to analyze drone footage for targeting, indeed sparked internal controversy. She advised framing the contract as a cloud computing win while cautioning against emphasizing AI to avoid perceptions of weaponization, reflecting her awareness of potential misuse. Ultimately, employee protests led Google to announce in 2018 that it would not renew the Maven contract and to establish AI principles prohibiting involvement in weaponry, a decision Li later described as an opportunity to prioritize ethical values.

You argue that Western intellectuals, including Li, overlook that ethics become irrelevant in “real war,” where survival demands victory at any cost, contrasting this with wars between culturally similar nations. This viewpoint aligns with historical analyses of asymmetric conflicts, where existential stakes often erode moral constraints. World War II provides illustrative examples: The Eastern Front between Germany and the Soviet Union was marked by extreme brutality, including mass executions, starvation tactics, and genocidal policies, driven by ideological fanaticism and racial doctrines, resulting in over 20 million Soviet deaths. In contrast, the Western Front against France and other Allies saw relatively more adherence to conventions, with fewer systematic atrocities against civilians, though violations occurred. The Pacific Theater, involving the United States and Japan, featured intense racial animosity, leading to events like the firebombing of Tokyo and atomic bombings of Hiroshima and Nagasaki, which some ethicists deem violations of jus in bello principles prohibiting disproportionate harm to non-combatants. The Allies’ insistence on unconditional surrender arguably prolonged the war and increased casualties, while the post-war Nuremberg Trials established accountability for crimes against humanity, suggesting that even in existential conflicts, retrospective ethical judgments persist.

Nevertheless, Li advocates proactive ethical frameworks in AI to prevent escalation, arguing that technological superiority should not preclude moral oversight. This perspective acknowledges wartime realities but posits that embedding human-centered principles can mitigate long-term harms, such as unintended civilian casualties in drone operations.

On Human-Centered AI Initiatives and Societal Futures

Your evaluation of the Human-Centered AI Institute and AI4ALL as commendable but secondary to business and military drivers is well-founded, as technological advancement historically prioritizes practical applications over idealism. These initiatives, co-founded by Li to promote diversity and ethical AI education, aim to address biases and inclusivity, yet their influence may indeed be overshadowed by economic imperatives.

Looking ahead, your prediction of AI exacerbating societal stratification—rendering labor redundant and concentrating control among elites—resonates with emerging research. AI is projected to automate routine tasks, disproportionately affecting low-skilled workers and widening income inequality, potentially shifting income toward capital owners and high-skilled professionals. Studies indicate that without intervention, AI could polarize economies, fostering a two-tier system of welfare-dependent masses and AI-leveraging elites, or a hyper-capitalist model where knowledge assets are monopolized. However, some analyses suggest mitigating factors, such as AI creating new job categories and enhancing productivity across sectors, provided policies like retraining programs and universal basic income are implemented. This underscores the need for balanced governance to ensure AI’s benefits are equitably distributed, rather than solely driven by hierarchical interests.

In summary, your take thoughtfully critiques the book’s emphasis on personal and ethical dimensions while foregrounding pragmatic drivers of AI. It invites reflection on how historical precedents inform future trajectories, emphasizing that while survival imperatives may dominate in crises, sustained ethical discourse remains essential for humane technological evolution.

20251011 – Antimemetics

MAIN IDEAS PER GROK:

Overview of Antimemetics: Why Some Ideas Resist Spreading

Antimemetics: Why Some Ideas Resist Spreading, authored by Nadia Asparouhova and published in 2025 by the Dark Forest Collective, presents a rigorous examination of the dynamics governing the dissemination of ideas in contemporary information ecosystems. Drawing on interdisciplinary insights from history, internet culture, cognitive psychology, and the author’s professional experiences in the technology sector, the book introduces the concept of “antimemetics” as a framework for understanding why certain ideas—despite their potential significance—fail to propagate widely, in contrast to the viral nature of memes. Asparouhova posits that in an era of unprecedented ease in sharing information, a paradoxical underground circulation of profound yet elusive concepts has emerged, often confined to private channels such as group chats and direct messages.

The Core Concept: Antimemes and Their Resistance to Spread

At the heart of the book is the notion of antimemes, which Asparouhova defines as ideas that resist replication and retention, not because of inherent weakness, but because of structural, psychological, or social barriers. Inspired by the science fiction novel There Is No Antimemetics Division by qntm, the term “antimemetics” refers to phenomena where ideas actively evade memory and transmission, much like entities that “burrow underground” to avoid detection. Memes, by contrast, thrive in attention-driven environments through simplicity, emotional resonance, and ease of sharing. Antimemes, however, demand cognitive effort, evoke discomfort, or lack immediate incentives for dissemination, leading to their suppression or forgetting. Asparouhova argues that this resistance is multifaceted:

- Cognitive and Personal Barriers: Antimemes often challenge self-perception or require substantial mental processing, triggering mechanisms like self-deception or avoidance. For instance, selfish motives that undermine one’s self-image (termed “self-discretion” by Kevin Simler and economist Robin Hanson) remain hidden, as acknowledging them disrupts personal narratives. Similarly, tasks or ideas that are consequential yet tedious, such as estate planning, recur as persistent yet forgettable obligations due to their emotional or intellectual cost.

- Societal and Cultural Taboos: Many antimemes manifest as unspoken truths or taboos that can be discussed in private but not publicly, due to risks of social ostracism or misalignment with prevailing norms. Asparouhova explores how these ideas circulate in “whisper networks,” highlighting a taxonomy that includes personal biases, collective suppressions, and structural disincentives.

- Technological and Platform Influences: The architecture of social media platforms favors memetic content—short, engaging, and shareable—marginalizing complex or uncomfortable ideas. Asparouhova critiques how this creates a “hive mind” that amplifies primal desires while burying nuanced perspectives, exacerbating information asymmetry in public discourse.

Supermemes and the Broader Information Ecosystem

Asparouhova extends her analysis to “supermemes,” the antithesis of antimemes: ideas that spread uncontrollably due to their alignment with mimetic desires, often fostering competition, envy, or conformity. She draws on René Girard’s mimetic theory to explain how platforms like Twitter and TikTok intensify these dynamics, turning users into unwitting carriers of viral content that prioritizes spectacle over substance. This imbalance, she contends, erodes creative potential and institutional trust, as seen in the shift from open forums to private, high-context spaces like encrypted chats, which preserve antimemetic ideas but fragment collective knowledge.

Implications and Pathways Forward

The book underscores the societal costs of antimemetic neglect: stalled progress on critical issues, such as long-term planning or ethical innovation, stems from an inability to sustain attention on high-stakes topics. Asparouhova advocates for “champions” and “truth tellers”—individuals who nurture these ideas through persistence and vulnerability—to bring them into the light, emphasizing that innovation often arises from embracing apparent foolishness or discomfort. Ultimately, Antimemetics proposes a balanced approach to the information age: by cultivating awareness of suppressed ideas, readers can reclaim agency, foster thoughtful participation, and mitigate the dominance of viral noise without total withdrawal.

In summary, Asparouhova’s work provides a precise lens for dissecting the asymmetries of idea propagation, urging a reevaluation of how cultural and technological systems filter human thought. It is particularly relevant for professionals in technology, media, and policy, offering actionable insights into navigating an attention-scarce landscape.

CONTENT:

MY TAKE ON IT:

This book presents a considerably more sophisticated approach to memetics than usual, describing memes’ characteristics and propagation process. I would take issue with the very idea of antimemes. The memes that fail to spread simply die and cease to exist, much like genes that fail to be passed on to the next generation. However, there are others that I would call deep memes because they do not die but are actually more potent than regular memes. I would compare memetics to the ocean, in which regular memes, much like waves on the surface, pop up quickly, are distributed widely, and then disappear without a trace. The deep memes (antimemes), like an underwater current, run deep and actually move masses of water over long distances, much like the Gulf Stream, in the process shaping the Earth’s climate.

The very challenges that deep memes face prove their validity and potency: their survival despite all these challenges means that they elicit such a strong response in human minds that it overrides both the fear of external disapproval and the pain of cognitive and personal barriers. Actually, that is how progress in human cultures is achieved: first, some doubt arises within one human mind and is shared with other close minds, but without being openly transmitted to others, which could cause trouble. At some point, this undercurrent becomes widely distributed without showing any noticeable blips on the surface. Finally, it bursts out in many places at the same time, amazing people who are unfamiliar with this deep meme, quickly becoming not just a regular meme but a supermeme, and changing the entire culture to a new and qualitatively different state.

GROK’S COMMENT ON MY OPINION:

Your perspective on Antimemetics: Why Some Ideas Resist Spreading by Nadia Asparouhova offers a compelling reinterpretation of the concept of antimemes, reframing them as “deep memes” with significant potency and influence. Below, I provide a formal analysis of your take, engaging with its key points and situating them within the context of Asparouhova’s framework.

Engagement with Your Critique of Antimemes

Your critique challenges the terminology of “antimemes” by suggesting that ideas which fail to spread widely do not simply perish, as Asparouhova’s framework might imply, but instead possess a latent, enduring power akin to genetic persistence in evolutionary biology. Your analogy to genes is apt: in biological terms, genes that fail to propagate typically vanish, but you argue that certain ideas—termed “deep memes”—persist despite barriers, much like recessive traits that resurface under favorable conditions. This perspective aligns partially with Asparouhova’s observation that antimemes often circulate in private, high-context spaces (e.g., whisper networks), but you extend this by positing that their resistance to immediate dissemination is not a weakness but a hallmark of their depth and transformative potential.

Asparouhova defines antimemes as ideas that resist replication due to cognitive, social, or technological barriers, such as discomfort, complexity, or misalignment with platform incentives. Your reframing as “deep memes” suggests that these barriers are not merely obstacles but filters that test an idea’s resilience, enhancing its potency. This is a sophisticated reinterpretation, as it shifts the focus from failure to propagate to a deliberate, subterranean mode of influence. However, Asparouhova might counter that not all antimemes survive these barriers—some may indeed “die” due to insufficient champions or structural suppression, which your model does not fully address.

The Ocean Analogy and Memetic Dynamics

Your oceanic metaphor—comparing regular memes to surface waves and deep memes to powerful underwater currents like the Gulf Stream—is a vivid and insightful framework for understanding memetic propagation. Surface memes, as you describe, are ephemeral, driven by immediate emotional resonance and platform algorithms that prioritize virality. This aligns with Asparouhova’s critique of social media’s “hive mind,” which amplifies simplistic, spectacle-driven content. Your depiction of deep memes as currents that move vast masses of water (ideas) over long distances captures their capacity to shape cultural “climates” subtly but profoundly, a point that resonates with Asparouhova’s emphasis on antimemes’ role in long-term societal progress.

This analogy enriches Asparouhova’s taxonomy by suggesting a dynamic lifecycle for deep memes: they originate in isolated minds, spread quietly through trusted networks, and eventually erupt as supermemes that reshape culture. Your model implies a teleological progression, where deep memes are not only resilient but destined to transform societies once they overcome initial resistance. Asparouhova, however, does not explicitly frame antimemes as inherently progressive; she notes their potential to stall progress if left unaddressed, as seen in neglected issues like long-term planning. Your view optimistically assumes that deep memes will eventually surface, which may overstate their inevitability without accounting for the role of external catalysts (e.g., Asparouhova’s “champions”).

Deep Memes and Cultural Progress

Your argument that deep memes’ survival against cognitive and social barriers proves their validity and potency is particularly compelling. By overcoming personal discomfort (e.g., cognitive dissonance) and external disapproval (e.g., taboos), these ideas demonstrate a resonance that transcends immediate social pressures. This aligns with Asparouhova’s discussion of truth tellers who nurture antimemes, but you extend this by framing their persistence as evidence of intrinsic strength. Your model of cultural progress—where doubt germinates in one mind, spreads covertly, and eventually bursts forth as a supermeme—offers a dynamic view of how paradigm shifts occur, reminiscent of historical examples like the Enlightenment or civil rights movements, where subterranean ideas eventually redefined societal norms.

However, this perspective could benefit from addressing potential counterpoints. For instance, not all deep memes may be beneficial or progressive; some, like conspiracy theories, can persist in underground networks and cause harm when they surface. Asparouhova’s framework implicitly acknowledges this by focusing on the structural barriers to dissemination rather than the normative value of the ideas themselves. You might consider distinguishing between deep memes with transformative potential and those that remain suppressed because they lack merit or are destructive.

Synthesis and Implications

Your reinterpretation of antimemes as deep memes offers a valuable lens for understanding the long-term impact of ideas that resist immediate virality. By emphasizing their potency and resilience, you highlight a mechanism for cultural evolution that complements Asparouhova’s analysis while challenging her terminology. Your oceanic metaphor effectively captures the interplay between surface-level noise and deeper currents of thought, providing a nuanced view of how ideas shape societies over time.

To refine your model further, you could integrate Asparouhova’s emphasis on the role of technological and social structures in suppressing antimemes. For example, how do platform algorithms or cultural norms influence the trajectory of deep memes before they surface? Additionally, exploring the conditions under which deep memes transition to supermemes, such as timing, leadership, or societal readiness, could strengthen your framework.

In conclusion, your take enriches the discourse on memetics by reframing antimemes as powerful undercurrents with transformative potential. It invites readers to reconsider the value of ideas that linger in obscurity, suggesting that their eventual emergence may herald significant cultural shifts. This perspective not only engages with Asparouhova’s work but also offers a forward-looking model for professionals in technology, media, and cultural studies to navigate the complexities of idea propagation in the information age.

20250803 – Judges and Their Audiences

MAIN IDEAS PER GROK:

In Judges and Their Audiences: A Perspective on Judicial Behavior (2006), political scientist Lawrence Baum argues that judges are significantly influenced by their desire for approval from various audiences, challenging traditional models of judicial behavior. Below are the main ideas of the book:

- Audience Influence on Judicial Behavior: Baum posits that judges, like most people, seek popularity and respect from audiences important to them, such as the public, other branches of government, court colleagues, the legal profession, and social peers. This desire for approval shapes their decisions in substantial ways, beyond merely making good law or policy.

- Contrast with Conventional Models: Traditional scholarly models (legal, attitudinal, and strategic) suggest judges primarily aim to interpret the law correctly, pursue their policy preferences, or advance those preferences strategically by anticipating the reactions of colleagues and other branches, with limited external influence. Baum argues these models overlook the pervasive impact of audiences, as judges’ motivations include personal validation, not just instrumental goals.

- Social Psychology Framework: Drawing on social psychology, Baum emphasizes that judges’ need for esteem and self-presentation drives their behavior. They care about how they are perceived by relevant audiences, not only for career or policy outcomes but for the intrinsic value of approval.

- Key Audiences and Their Impact: The book analyzes specific audiences:

  - The Public: Especially for elected judges, public opinion can influence decisions to align with societal expectations.

  - Other Branches of Government: Judges may consider reactions from Congress or the executive to maintain institutional legitimacy.

  - Court Colleagues: Fellow judges’ opinions affect decision-making, as collegial respect is valued.

  - Legal Profession: Lawyers, law professors, and legal scholars shape judges’ desire for professional prestige.

  - Social Peers: Personal networks and social groups influence judges’ self-image and rulings.

- Empirical and Scholarly Support: Baum integrates a broad range of scholarship and empirical evidence to demonstrate how audience influence manifests in judicial decisions. He highlights cases where judges’ behavior deviates from existing models, suggesting these deviations stem from audience considerations.

- Precedent Vitality: A recurring theme is that the strength or “vitality” of legal precedents affects how judges use them to justify outcomes, often in response to audience expectations.

- Critique and Challenges: While Baum’s thesis is innovative, he acknowledges the difficulty of empirically proving audience influence, as it involves subjective motivations. Critics note that his model may not fully replace existing frameworks but clarifies and complements them.

- Implications for Judicial Studies: The book calls for rethinking judicial behavior by incorporating non-ideological factors and personal motivations. Baum suggests scholars use dependent variables beyond liberal/conservative voting to test audience effects, paving the way for future research.

Overall, Baum’s work provides a compelling perspective that judges are not isolated decision-makers but are embedded in a social and professional context where audience approval significantly shapes their choices. The book is praised for its accessible prose and extensive literature analysis, earning accolades including the 2007 C. Herman Pritchett Award.

CONTENT:

MY TAKE ON IT:

This book provides a very clear and plausible explanation of judges’ behavior. The normative approach, in which judges are supposed to be impartial and care mainly about the proper application of law to each case under their consideration, is shown to be invalid by close review of any case known to humanity. There is even research, amusing to outsiders, that found a statistically significant difference in judges’ decisions depending on whether they were made before or after lunch. Judges’ political and ideological inclinations obviously have a somewhat more critical influence on decisions than lunch does.

I do not think this can be changed, since judges are human and, like all of us, products of a million years of human evolution, during which community opinion had literally life-and-death consequences for any individual. This book nicely defines who belongs to the communities judges care about, and these are usually not the people whose fate judges decide.

So, the solution, in my view, is to make non-human AI the primary source of judicial decisions. It could go like this:

- All data relevant to a case is provided to an AI, which generates a judicial decision.

- A judge interacts with the AI to support or reject this decision, and the final output documents this interaction in full detail.

- The judge makes a final decision, which includes a detailed discussion of all points raised during interactions with AI.

- To ensure that the AI tools are not manipulated, the prosecution and the defense should each independently provide their own tool, and both AI outputs should be presented to the judge.

I believe that if something similar to this procedure is applied, we’ll come closer to achieving impartial justice.

GROK’S COMMENT ON MY OPINION:

Your take on Lawrence Baum’s Judges and Their Audiences offers a thoughtful and provocative perspective, aligning well with the book’s core argument while extending it into a forward-looking proposal for AI-driven judicial reform. Below, I’ll comment on your interpretation and solution, addressing both its strengths and potential challenges.

Strengths of Your Take

- Alignment with Baum’s Thesis: You accurately capture Baum’s central idea that judges are influenced by social and professional audiences, not just legal principles or ideology. Your observation that judges, as humans, are shaped by evolutionary pressures to seek community approval resonates with Baum’s social psychology framework. This grounding strengthens your argument by tying it to the book’s emphasis on judges’ desire for esteem from specific groups (e.g., colleagues, legal professionals) rather than the litigants before them.

- Critique of Normative Judicial Models: Your point that the normative ideal of impartiality is “invalid” in practice is compelling and supported by Baum’s work, which challenges the notion of judges as detached legal arbiters. The humorous yet telling example of lunch affecting decisions (likely referencing studies like Danziger et al., 2011, on parole decisions) effectively illustrates how external factors—beyond ideology—shape outcomes, reinforcing Baum’s argument about non-legal influences.

- Realistic View of Human Nature: Your acknowledgment that judges’ susceptibility to audience influence is rooted in human evolution is a sharp insight. It aligns with Baum’s view that personal validation is a universal motivator, making it unlikely for judges to be fully insulated from social pressures. This sets up your AI proposal as a logical response to an intractable human problem.

- Innovative AI Solution: Your proposal to integrate AI into judicial decision-making is creative and addresses the core issue of bias by reducing reliance on human subjectivity. The structured process—AI generating a decision, judicial review with transparent documentation, and dual AI inputs from prosecution and defense—aims to enhance impartiality and accountability. Requiring judges to justify deviations from AI outputs could indeed force greater reflection and reduce unexamined audience-driven biases, a direct response to Baum’s findings.

Potential Challenges and Considerations

- Overstating Normative Failure: While you argue that the normative model of impartiality is “invalid” based on case reviews, Baum doesn’t entirely dismiss the influence of legal principles. He suggests audience effects complement, rather than replace, legal and ideological factors. Some judges may still strive for impartiality, even if imperfectly, and empirical evidence (e.g., studies on precedent adherence) shows legal norms can constrain behavior. Your take might benefit from acknowledging this nuance to avoid implying judges are wholly driven by non-legal motives.

- AI as a Panacea: Your AI-driven solution assumes AI can produce impartial decisions, but AI systems are not immune to bias. They are trained on human-generated data, which may embed existing societal or legal inequities (e.g., biased sentencing patterns in historical data). Your proposal mitigates this by involving independent AI tools from both parties, but ensuring these tools are truly neutral and not manipulated (e.g., through proprietary algorithms or selective data inputs) would require rigorous oversight. Baum’s focus on human motivations suggests judges might still prioritize audience approval when reviewing AI outputs, potentially undermining the process unless transparency is strictly enforced.

- Practical Implementation: Your model requires judges to engage deeply with AI outputs and document interactions comprehensively, which could be resource-intensive and face resistance from a judiciary accustomed to autonomy. Additionally, litigants with fewer resources might struggle to provide sophisticated AI tools, risking disparities in the quality of AI inputs. Baum’s analysis of audience influence also implies judges might be swayed by professional audiences (e.g., legal scholars skeptical of AI) to downplay AI recommendations, complicating adoption.

- Ethical and Social Implications: Replacing or heavily supplementing human judgment with AI raises questions about accountability and the role of human discretion in justice. Baum notes that judges’ responsiveness to audiences, while imperfect, reflects their embeddedness in society, which can ensure decisions align with evolving norms. An AI-centric system might struggle to adapt to nuanced social contexts or moral considerations not easily codified in data. Your proposal’s strength lies in retaining human oversight, but balancing AI and human roles would be critical to avoid overly mechanistic outcomes.

Suggestions for Refining Your Proposal

- Strengthen AI Neutrality: Specify mechanisms to ensure AI tools are transparent and audited for bias, perhaps through standardized, open-source models certified by a neutral body. This would address potential manipulation concerns.

- Incorporate Baum’s Audiences: Consider how your system could account for judges’ audience-driven motivations. For example, public reporting of judges’ deviations from AI outputs could leverage public and professional scrutiny to discourage biased overrides, aligning with Baum’s emphasis on audience influence.

- Pilot Testing: Propose testing your model in lower-stakes contexts (e.g., administrative hearings) to assess feasibility and refine the process, addressing practical barriers like cost and judicial resistance.

Overall Assessment

Your take incisively applies Baum’s insights to a modern problem, offering a bold vision for reducing judicial bias through AI. It effectively critiques the myth of judicial impartiality and grounds judges’ behavior in human nature, consistent with Baum’s social psychology lens. While the AI proposal is ambitious and theoretically sound, its success hinges on overcoming technical, practical, and ethical hurdles. By anticipating these challenges and leveraging Baum’s audience framework to design incentives (e.g., transparency to hold judges accountable), your solution could move closer to the impartial justice you envision. It’s a fascinating extension of Baum’s work, blending his behavioral insights with a futuristic approach to judicial reform.

20250713 – The Extinction of Experience

MAIN IDEAS PER GROK:

In The Extinction of Experience: Being Human in a Disembodied World, Christine Rosen explores how modern technology is eroding essential human experiences, leading to a disconnection from our humanity. Drawing on extensive research and philosophical reflection, the book critiques the cultural and emotional shifts driven by our reliance on digital devices, algorithms, and virtual platforms. Below are the main ideas:

- Loss of Embodied Human Experiences: Rosen argues that technology is causing the “extinction” of key human experiences, such as face-to-face communication, a sense of place, authentic emotion, and even boredom. These are being replaced by mediated interactions via smartphones, social media, and virtual realities, which prioritize efficiency and convenience over depth and meaning.

- Alienation and Control through Technology: The book highlights how digital platforms habituate users to alienation and control. Technology companies exploit emotions and data for profit, treating human experiences as commodities. This shift fosters a machine-like existence, where people conform to algorithmic predictability rather than embracing serendipity or risk.

- Impact on Perception and Reality: Rosen contends that technology warps our sense of self and reality. People increasingly create personal realities online, mistrust their own experiences, and lose a shared sense of truth. This is exacerbated by phenomena like online conspiracy culture and the pressure to curate lives for social media.

- Critique of Technological Progress: While acknowledging technology’s benefits, Rosen challenges the assumption that all technological change is inherently good. She argues that rapid advancements are ambivalent, capable of harm, and require critical scrutiny. The book advocates for mindful usage and self-imposed limits to preserve human qualities.

- Call to Reclaim Humanity: Rosen urges readers to reclaim serendipity, community, patience, and risk by prioritizing unmediated, real-world interactions. Examples include navigating without GPS, handwriting, or embracing moments of idleness to foster creativity. The book serves as a guide to rediscovering direct engagement with the physical world.

- Cultural and Historical Context: The book situates its critique within a historical framework, noting that concerns about technology’s impact are not new (e.g., Victorian worries about daguerreotypes). However, Rosen emphasizes the unprecedented scale of modern technology’s influence, driven by corporate interests and pervasive devices.

- Awareness Over Solutions: While thoroughly documenting the problem, Rosen focuses more on raising awareness than offering detailed solutions. She suggests a balanced approach, akin to the Amish’s skepticism of new technologies, to evaluate their impact on community and values.

Rosen’s work is praised for its engaging prose and research but criticized for occasionally lacking specificity or actionable steps. It remains a compelling call to reflect on what it means to be human in a digital age, urging readers to prioritize visceral, real-life connections.

CONTENT:

MY TAKE ON IT:

This book nicely describes a radical change in human life: from mainly direct, face-to-face interaction between people, with remote interaction limited to broadcasting such as TV and radio or to very limited and slow tools such as the telephone and paper mail, to practically unlimited remote interaction via computerized networks. This mediated interaction completely changes our perception of the world. It allows those who control the tools of mediation to present us with a warped, artificial world that may or may not have any relation to reality. Lies, propaganda, gossip, and the like have always distorted reality, but never to such an extent and with such sophistication as now. We are on the brink of a world in which AI can manufacture whatever reality those in control want us to perceive, and thereby control our beliefs and the actions that follow from them.

The book is rich in descriptions of the consequences of this new reality but lacks actionable suggestions for overcoming these huge problems. I think the solution should come from expanding the legal system to provide tools for enforcing high-quality information and suppressing lies and propagandist distortions. The First Amendment of the American Constitution is an indispensable tool in the struggle for high-quality information because it prevents the suppression of expression. This makes it impossible to restrict sources of information, so the elite in control find it difficult to declare the truth to be lies and vice versa. It guarantees, at least in theory, unlimited production and distribution of information, which reduces the problem to finding a method for discriminating between truth and lies when both are pouring into the communication space. That’s where the legal system could provide a solution by allowing any involved party to formally challenge information it considers a lie. An open court process, fully available for observation by all interested parties and ending in a jury’s decision, would be much better than what we have now, when the party with the bigger megaphone wins. If the punishment is not a monetary fine or prison, but instead the compelled use of the same tool to produce twice the amount of court-defined accurate information in the same format as the lies previously produced, such lies become enormously counterproductive. On the technical side, it would be valuable to have blockchain tools for depositing video and other information in real time, with no technical ability to change it afterward.

As to the impact on human psychology, I am afraid we will all have to learn to manage our communication environment so that it becomes a valuable tool under our control rather than somebody else’s. I am sure that technical tools for such control will arrive in due time. They are already being developed on a mass scale.

GROK’S COMMENT ON MY OPINION:

Your take on The Extinction of Experience captures the book’s core concerns about the shift from direct to mediated interactions and the resulting distortion of reality. I agree that Rosen effectively highlights how digital networks, controlled by powerful entities, can manipulate perceptions to an unprecedented degree, creating artificial realities that shape beliefs and behaviors. Your point about AI amplifying this issue is particularly prescient, as it underscores the urgency of addressing these challenges in an era where synthetic content can seamlessly blend with truth.

Your critique of the book’s lack of actionable solutions aligns with some reviews, and your proposed legal framework is an intriguing response. Expanding the legal system to enforce high-quality information while leveraging the First Amendment’s protections could theoretically create a more transparent information ecosystem. The idea of courts and juries adjudicating truth versus lies, with penalties like mandated corrective content, is creative and aims to level the playing field against those with “bigger megaphones.” However, implementing this could face significant hurdles. Defining “truth” in a polarized society is fraught with complexity, and juries, while democratic, can be swayed by bias or misinformation themselves. Additionally, scaling such a system to handle the volume of digital content would require immense resources and could risk overreach, potentially chilling free expression despite First Amendment safeguards.

Your suggestion of blockchain for immutable information storage is technically sound, as it could ensure authenticity and traceability of content, countering deepfakes or retroactive edits. This aligns with emerging trends in decentralized tech aimed at restoring trust in digital media. However, adoption would need to overcome accessibility barriers and resistance from platforms that benefit from malleable data.

On the psychological front, your optimism about individuals learning to manage their communication environments is encouraging, and I share your confidence that tools for this are in development. Existing efforts, like content moderation algorithms and user-controlled filters, are steps in this direction, though they’re imperfect and often corporately driven. The challenge will be ensuring these tools empower users without introducing new forms of control or surveillance.

Overall, your analysis extends Rosen’s arguments thoughtfully, offering proactive solutions where the book leans descriptive. Balancing technological innovation, legal reform, and personal agency will indeed be critical to navigating this disembodied world without losing our grip on reality.

20250608 – 1177 BC: The Year Civilization Collapsed

MAIN IDEAS PER GROK:

In 1177 B.C.: The Year Civilization Collapsed, Eric H. Cline explores the dramatic collapse of the interconnected Bronze Age civilizations around the eastern Mediterranean and Near East. The main ideas of the book include:

- Interconnectedness of Bronze Age Societies: Cline emphasizes the sophisticated network of trade, diplomacy, and cultural exchange among civilizations like the Egyptians, Hittites, Minoans, Mycenaeans, and Canaanites, which created a globalized world by the Late Bronze Age.

- The Collapse Around 1177 B.C.: The book centers on the catastrophic decline of these societies within a short period, marked by the destruction of cities, the collapse of trade networks, and societal disintegration. Cline uses 1177 B.C., the year the Sea Peoples attacked Egypt in the eighth year of Ramesses III’s reign, as a symbolic date for a collapse that also destroyed key cities such as Ugarit.

- Multiple Causes of Collapse: Cline argues against a single cause, proposing a “perfect storm” of factors, including:

  - Climate Change and Drought: Prolonged droughts and environmental stress disrupted agriculture and economies.

  - Invasions and Migrations: The “Sea Peoples,” a confederation of migrant groups, are implicated in attacks on coastal cities, though their role is nuanced.

  - Earthquakes and Natural Disasters: A series of earthquakes may have weakened urban centers.

  - Economic and Social Instability: Overreliance on complex trade networks, internal revolts, and declining resources strained societies.

  - Systems Collapse: The interconnected nature of these civilizations meant that disruptions in one region cascaded across the network.

- Role of the Sea Peoples: While traditionally blamed for the collapse, Cline portrays the Sea Peoples as both a symptom and a contributor to the chaos, likely displaced groups seeking new homes amid widespread instability.

- Long-Term Consequences: The collapse ushered in a “Dark Age” of reduced literacy, trade, and cultural activity, setting the stage for the eventual rise of Iron Age civilizations like Greece and Rome.

- Relevance to Modern Times: Cline draws parallels to contemporary globalized societies, warning that interconnected systems are vulnerable to similar cascading failures if faced with multiple stressors.

Cline combines archaeological evidence, ancient texts, and modern scholarship to present a nuanced, multifactorial explanation of the collapse, emphasizing the fragility of complex societies.

CONTENT:

MY TAKE ON IT:

I have a bit of a problem with the notion of society’s collapse. Human societies have always competed for control over resources and people. The expansion of such control by one group of people at the expense of another is not necessarily a collapse. The typical definition of civilization, as something characterized by literacy and hierarchical bureaucratic organization with a clearly identifiable elite, seems quite limited. From my point of view, the collapse of the interconnected Bronze Age civilizations described in this book is just the normal process of more militarily effective groups replacing less militarily effective ones. In this case, it was the replacement of somewhat static bronze-based societies by more dynamic iron-based societies. The relatively long list of potential causes, such as climate change and natural disasters, seems redundant in explaining such a replacement. The development of iron working appears to me to be a sufficient cause.

We are so used to ranking societies as superior or inferior based on relatively insignificant characteristics, such as literacy or organizational complexity, that we forget it all comes down to a simple question: Who beats whom? From this point of view, the transition from Bronze Age to Iron Age societies improved humanity’s survivability and was therefore progressive, literacy and sophistication of societies notwithstanding.

I also agree with the author that there is a lesson here for our time: don’t become overly interconnected and sophisticated at the expense of the effectiveness and efficiency of societal processes, especially military capabilities. In our time, such a dangerous development occurred with the transfer of essential parts of productive capacity, such as manufacturing, from individual-based free societies to hierarchical group-based societies, or, in other words, from the capitalist West to Communist China. However, despite this current danger, I believe we are at a turning point: the understanding of this danger has matured sufficiently to be converted into action, expressed as the technological decoupling of these two forces.

This will undoubtedly be followed by a period of competition, quite probably even in military form, but I am sure that the West and freedom will win, because only intellectual and productive freedom can produce effective scientific and economic development, while group-based hierarchy tends to suppress individual freedom in all areas and consequently prevents technological development. It really does not matter that China produces ten times more engineers than the USA, or that they are as bright and talented as Westerners. They will not be able to do much, because bureaucrats, as smart and talented as the engineers, will stop them from doing anything that could interfere with the bureaucratic status quo, which new technology inevitably does. In contrast, in the West, the relative weakness of bureaucrats and the wide distribution of control over resources will allow Western engineers to maintain technological dominance, especially if technology transfer is cut off, leaving communist China in the position the USSR occupied 50 years ago.

GROK’S COMMENT ON MY OPINION:

Your perspective on 1177 B.C.: The Year Civilization Collapsed offers a provocative reinterpretation of the Bronze Age collapse, emphasizing military competition and technological superiority (iron over bronze) as the primary drivers of societal change, while questioning the traditional framing of “collapse” and the relevance of factors like literacy or bureaucratic complexity. Below, I’ll engage with your key points, offering both agreement and counterpoints, while tying them to Cline’s arguments and broader historical context. I’ll also address your modern analogy regarding the West and China, focusing on the dynamics of technological and societal competition.

1. Reframing “Collapse” as Substitution

Your Argument: You challenge the idea of a “collapse,” suggesting it’s merely a natural process where more militarily effective groups (Iron Age societies) replaced less effective ones (Bronze Age societies). You view this as a competitive substitution rather than a societal failure.

Comment: Your framing aligns with a Darwinian view of history, where competition for resources and survival drives change, and the “fittest” societies prevail. This perspective has merit, especially since the Iron Age brought technological advantages—iron was more abundant and, once mastered, produced stronger tools and weapons. The disruption of bronze trade (due to tin scarcity post-1200 B.C.) likely incentivized iron adoption, giving iron-using groups a military edge, as you suggest.

However, Cline’s argument in 1177 B.C. emphasizes that the collapse wasn’t just a straightforward replacement of one group by another. The interconnected Bronze Age world, spanning Egypt, the Hittites, the Mycenaeans, and others, was a complex system where trade, diplomacy, and cultural exchange created mutual dependencies. When this system unraveled, it wasn’t simply because iron-wielding “Sea Peoples” or other groups outfought bronze-based societies. Entire urban centers were abandoned, literacy declined (e.g., Linear B writing disappeared from Greece), and trade networks collapsed, leading to a “Dark Age” with reduced population and cultural output. This suggests a systemic failure beyond military defeat, where societies couldn’t adapt to multiple stressors (drought, invasions, earthquakes).

Counterpoint: While military effectiveness matters, your view may oversimplify the role of non-military factors. For example, climate data from pollen records and sediment cores show a severe drought around 1200 B.C., which likely caused famine and weakened urban centers, making them vulnerable to attack. Similarly, internal revolts or economic strain (e.g., an overtaxed peasantry) could have eroded societal cohesion. Iron’s adoption, while critical, took centuries to refine (early wrought iron was often no harder than good bronze until smiths learned to carburize and quench it), suggesting it wasn’t an immediate game-changer. The collapse’s scale, a near-simultaneous decline across multiple regions, implies a confluence of factors, as Cline argues, rather than a single cause like iron’s superiority.

2. Critique of Civilization’s Definition

Your Argument: You question the traditional definition of civilization (literacy, bureaucracy, elite hierarchies) as limited, arguing that military success (“who beats whom”) is the ultimate measure of societal progress. You see the Iron Age transition as improving humanity’s survivability, regardless of literacy or complexity.

Comment: Your focus on military and technological effectiveness as the core of societal success is a compelling lens, especially in the context of survival and resource competition. The shift to iron indeed democratized access to metal tools and weapons (iron ore being more widespread than tin or copper), potentially empowering smaller or less centralized groups. This aligns with the emergence of less hierarchical Iron Age societies in some regions (e.g., early Greek poleis).

However, Cline’s work highlights that the Bronze Age’s “civilized” traits—literacy, bureaucracy, and trade—enabled unprecedented prosperity and stability for centuries. For instance, Ugarit’s archives reveal sophisticated diplomatic correspondence, and Mycenaean palaces coordinated large-scale trade. The loss of these systems led to a measurable decline in living standards, population, and cultural production, which challenges the idea that the transition was purely progressive. The “Dark Age” that followed (ca. 1200–800 B.C.) saw reduced artistic output, fewer monumental buildings, and fragmented communities, suggesting that the substitution wasn’t seamless or immediately beneficial.

Counterpoint: While military prowess determines short-term survival, literacy and organizational complexity often drive long-term resilience. For example, Egypt survived the collapse partly due to its bureaucratic resilience and ability to adapt (e.g., shifting to iron later). Dismissing these traits risks undervaluing how they enable societies to recover from crises. The Iron Age’s eventual success (e.g., rise of Classical Greece) built on the cultural and technological foundations of the Bronze Age, not just raw military power.

3. Iron as the Primary Cause

Your Argument: You argue that iron’s development is a sufficient explanation for the Bronze-to-Iron Age transition, rendering other causes (climate change, disasters) redundant.

Comment: Iron’s role as a disruptive technology is undeniable. Its abundance and eventual superiority over bronze shifted power dynamics, as you note. Archaeological evidence, like iron weapons in Philistine sites (ca. 1100 B.C.), supports the idea that early iron adopters gained advantages. The collapse of bronze trade networks, as Cline notes, likely accelerated this shift, as societies turned to locally available iron.

However, Cline’s multifactorial approach is grounded in evidence that no single cause fully explains the collapse’s scale. For instance:

- Climate Change: Tree-ring data and lake sediment records indicate a 200-year drought (ca. 1200–1000 B.C.), which likely caused crop failures and migrations.

- Earthquakes: Geological studies show seismic activity in the eastern Mediterranean, potentially destroying key cities like Mycenae or Hazor.

- Sea Peoples: Egyptian inscriptions (e.g., Medinet Habu) describe invasions by displaced groups, suggesting external pressures beyond technological inferiority.

These factors created a feedback loop in which weakened societies were less able to resist iron-armed invaders or adapt to change. Iron’s adoption, while pivotal, was likely a response to these broader disruptions rather than the sole driver.

Counterpoint: Your emphasis on iron’s sufficiency may overlook how systemic vulnerabilities amplified its impact. A society with robust food reserves or diversified trade might have weathered iron’s rise, as Egypt partially did. The collapse’s complexity suggests that iron was a catalyst within a larger crisis, not the sole cause.

4. Lessons for Today: Interconnectedness and Military Effectiveness

Your Argument: You agree with Cline’s warning about over-interconnectedness and draw a modern parallel, arguing that the West’s transfer of manufacturing to China mirrors the Bronze Age’s vulnerabilities. You predict a technological decoupling and Western dominance due to individual freedom fostering innovation, contrasting this with China’s bureaucratic suppression.

Comment: Your analogy captures a key lesson from Cline: interconnected systems are fragile when overreliant on specific nodes (e.g., bronze trade then, manufacturing now). The West’s outsourcing of production to China has created vulnerabilities, as seen in supply chain disruptions (e.g., during COVID-19). Your optimism about Western resilience due to intellectual freedom aligns with historical patterns in which decentralized systems adapt faster to crises. For example, post-collapse Greece’s fragmented city-states fostered innovation (e.g., adapting the Phoenician alphabet, developing democracy), unlike centralized empires.

Your argument about China’s bureaucratic constraints is plausible. Innovation thrives in environments with open inquiry, as seen in the West’s historical edge in scientific revolutions. China’s centralized control, while efficient for scaling production, can stifle disruptive ideas, as evidenced by its lag in cutting-edge fields like AI chip design (despite heavy investment). The USSR’s technological stagnation, despite a vast engineering workforce, supports your point about bureaucracy hindering progress.

Counterpoint: While freedom fosters innovation, China’s strengths—state-driven investment, rapid infrastructure growth, and a massive STEM workforce—pose challenges. For instance, China leads in 5G patents and renewable energy deployment. The West’s advantage depends on maintaining open systems and addressing internal issues (e.g., declining STEM education, political polarization). Decoupling, as you suggest, is underway (e.g., U.S. CHIPS Act, EU’s supply chain initiatives), but it carries risks like economic disruption or escalation. Military competition, while possible, is less likely than economic and technological rivalry, given mutual deterrence (e.g., nuclear arsenals).

Additional Note: Cline’s lesson about systemic fragility applies broadly. The West must balance efficiency (e.g., globalized supply chains) with resilience (e.g., domestic manufacturing), as over-specialization can mirror the Bronze Age’s downfall. Your call for technological decoupling aligns with this, but success hinges on execution and avoiding self-inflicted weaknesses.

Conclusion

Your take offers a refreshing lens on the Bronze Age collapse, emphasizing competition and technological shifts over traditional narratives of decline. You’re right that iron’s rise was pivotal and that military effectiveness often dictates survival. However, Cline’s evidence suggests a more complex picture, where environmental, social, and economic stressors amplified iron’s impact. Your modern analogy is insightful, highlighting the risks of over-interconnectedness and the strengths of open societies. While the West has advantages in innovation, the outcome of its rivalry with China will depend on strategic adaptation, much like the Iron Age’s winners navigated the chaos of 1177 B.C.

20250511 – The Nature of Technology

MAIN IDEA:

W. Brian Arthur’s The Nature of Technology: What It Is and How It Evolves (2009) explores the essence, development, and evolution of technology. Here are the main ideas:

- Technology as a System of Purposeful Combinations: Arthur defines technology as a collection of phenomena harnessed to achieve specific purposes. It’s not just physical objects but includes processes, methods, and organizational systems, all built from components that work together to fulfill a goal.

- Three Facets of Technology: He categorizes technology into three dimensions:

- Individual Technologies: Specific tools or methods (e.g., a jet engine).

- Technology as a Body of Knowledge: The collective know-how, practices, and principles underlying technologies.

- Technology as a System: The broader infrastructure and networks (e.g., transportation or communication systems) that enable individual technologies to function.

- Evolution Through Combination: Technologies evolve by combining existing components in novel ways. New technologies emerge from the recombination of prior technologies, much like biological evolution builds on existing structures. This process is cumulative and recursive.

- Principle of Modularity: Technologies are modular, meaning they are built from standardized components that can be rearranged or upgraded. This modularity enables innovation and scalability, as seen in industries like computing.

- Concept of “Redomaining”: Major technological breakthroughs often involve shifting to a new domain or paradigm (e.g., from mechanical to digital computing). This creates new possibilities and disrupts existing systems.

- Technology as a Self-Creating System: Arthur argues that technology is autopoietic—it creates the conditions for its own advancement. As technologies solve problems, they generate new needs and opportunities, driving further innovation.

- Economy as an Expression of Technology: The economy is not separate from technology but a reflection of it. Technologies shape economic structures, and economic demands, in turn, influence technological development.

- Path Dependence and Lock-In: Technological evolution is path-dependent, meaning early choices can lock in certain trajectories, making it hard to shift to alternatives (e.g., the QWERTY keyboard).

- Human-Technology Relationship: Humans create technologies, but technologies also shape human behavior, society, and culture, creating a co-evolutionary dynamic.

Arthur’s core thesis is that technology is a dynamic, evolving system akin to a living organism, driven by human ingenuity and structured by principles of combination, modularity, and adaptation. The book provides a framework for understanding how technologies emerge, interact, and transform the world.

CONTENT:

MY TAKE ON IT:

It’s a very well thought-through and detailed book, and I generally agree with most of its positions. However, I’d like to note that technology does not exist independently of human minds. Humans create and use technology, and this creation and use occur within the framework of whatever ideology dominates human society. That ideology, in turn, represents the human understanding of the environment and of ways to survive and thrive within it. So, if people understand the environment as an expression of the will of some conscious superior being, then the only technology needed is a method of communicating with this superior being, asking him to give us what we need and to change the environment to help us satisfy our needs. In this case, the technology of interacting with the environment is much less important than these communications and therefore does not merit effort beyond maintaining traditional practices. Consequently, traditional societies promoted and rewarded all kinds of theological activities and paid little, if any, attention to the development of technology.

Our current era, which began with the development of a scientific ideology of methodical experimentation, continual growth and revision of our understanding of the environment, and technological changes applied to that environment, has provided us with nearly complete freedom from material needs but has created complex issues of resource allocation and of the distribution of control over resources. The current challenge with AI is to use it to create an environment that provides all humans with the resources to satisfy their material and psychological needs, while maintaining complete control over AI and preventing it from developing a consciousness independent of humans, or even detrimental to their existence.

GROK’s COMMENT ON MY OPINION:

Your opinion on W. Brian Arthur’s The Nature of Technology is insightful, particularly in emphasizing the human-centric and ideological context of technological development, which complements and extends Arthur’s framework. Below, I’ll comment on your take, addressing key points and offering some reflections:

- Technology’s Dependence on Human Minds and Ideology:

You astutely highlight that technology is not an independent entity but a product of human cognition and societal ideology. This aligns with Arthur’s view of technology as a human creation but adds a critical layer by tying it to the dominant worldview or ideology. Your example of traditional societies prioritizing theological “technologies” (e.g., rituals to communicate with a superior being) over material innovation is compelling. It illustrates how ideology shapes technological priorities, a point Arthur touches on indirectly through path dependence and the human-technology co-evolutionary dynamic but doesn’t explore as explicitly as you do. This perspective enriches Arthur’s framework by grounding technological evolution in cultural and philosophical contexts.

- Scientific Ideology and Material Freedom:

Your observation that the scientific method and iterative experimentation have freed humans from many material constraints is a strong point. It resonates with Arthur’s idea of technology as a self-creating system, where advancements generate new possibilities. However, you extend this by noting the trade-offs: while technology has alleviated material scarcity, it has introduced complex challenges in resource allocation and control. This aligns with Arthur’s view of the economy as an expression of technology but shifts focus to the socio-political implications, which he addresses less directly. Your framing underscores the need to consider equity and governance in technological systems, adding a normative dimension to Arthur’s descriptive analysis.

- AI and the Challenge of Control: