Tag Archives: chatgpt

20251129 – The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI

MAIN IDEAS PER GROK:

Fei-Fei Li’s book, The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI, is a memoir that intertwines her personal life story with the evolution of artificial intelligence, particularly in the field of computer vision. It chronicles her journey from a childhood in Chengdu, China, amid political and economic challenges, to her immigration to the United States as a teenager in the early 1990s. Facing poverty, cultural adaptation, and family hardships—including working in her parents’ dry-cleaning business and caring for her ailing mother—Li emphasizes the transformative role of education, mentorship, and perseverance in overcoming these obstacles.

A central idea is Li’s professional development as a pioneering scientist, beginning with her studies in physics at Princeton University, followed by a Ph.D. in electrical engineering at Caltech, and her subsequent academic positions at Stanford University. The book details her groundbreaking creation of ImageNet, a massive dataset of labeled images that catalyzed advances in deep learning and computer vision, notably through the 2012 AlexNet breakthrough, which marked a pivotal moment in AI’s ability to process visual information. This innovation is presented as a testament to the power of curiosity, iterative research, and interdisciplinary collaboration, while highlighting the resource-intensive nature of such scientific endeavors.

Another key theme is the ethical imperative for human-centered AI development. Li advocates for addressing algorithmic biases, privacy concerns, and the need for diversity in the field, drawing from her experiences with ethical dilemmas, such as her involvement with Google’s Project Maven and her decision to prioritize societal well-being over certain applications. She co-founded initiatives like AI4ALL to promote inclusivity in AI education and established Stanford’s Human-Centered AI Institute to ensure technology aligns with human dignity, empathy, and equity.

Overall, the book underscores the interplay between personal identity—as an immigrant, woman, and caregiver—and technological progress, urging responsible innovation that benefits humanity. It reflects on AI’s potential to enhance fields like healthcare while cautioning against its risks, and it inspires through Li’s vision of science as a pursuit driven by exploration and moral responsibility.

MY TAKE ON IT:

This book’s duality of narratives makes it a bit more entertaining than my typical reading, but the story of an immigrant making it in the USA is not something worth dwelling upon. More interesting is how the author built her career: essentially, by collecting images and labeling them through human labor. It was a necessary first step on the road to AI, but it was neither a scientific breakthrough nor a novel approach. I did the same back in 1978 as a graduate student in the USSR, collecting and labeling electronic schema designs for automated patent analysis. Back then, data storage still relied on punch cards and magnetic tapes.

However, the author’s discussion of AI does present some interesting points, especially in relation to Project Maven, the military application of AI. It always amazes me that Western intellectuals are unable to understand a simple thing: no ethical considerations apply to real war. They are so used to the overwhelming technological superiority of the contemporary West over its enemies that they forget that real war is about the survival of your side and the annihilation of the enemy. Ethical considerations were only ever meaningful in wars between similar countries with similar religious and cultural attitudes, when the results were not existential for either side. Such wars are only a tiny subset of all human wars. When the issue is who will live and who will die or become slaves, the only ethical consideration is to win at any cost. The actual behavior of Western countries during World War II provides a wealth of illustrative material on the ethical versus existential conduct of war. One needs only to examine the differences in behavior between the various fronts: Germans versus Russians, Germans versus French, Americans versus Germans, and Americans versus Japanese.

Regarding the Human-Centered AI Institute and AI4ALL, it is all well and good, but niceties do not drive technology; business and military needs do. So, I am convinced that AI will change the traditional composition of society, in which control over resources is concentrated in the hands of relatively few people at the top of the business and government hierarchies, the vast majority make a living by providing labor for these few to achieve their goals, and people with low skills live on handouts from the welfare state. AI will make all labor redundant, so instead of the three layers of today’s semi-capitalism, society will come down to one of two arrangements. One is welfare socialism with two layers, in which a small number at the top use AI to achieve their goals while providing the majority at the bottom with “bread and circuses.” The other is full capitalism with only one layer: property owners pursuing their happiness using AI, with the more successful renting from the less successful the rights to use the common property of accumulated knowledge and know-how expressed via AI.

GROK’S COMMENT ON MY OPINION:

Your perspective on Fei-Fei Li’s memoir, The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI, offers a critical analysis that highlights the interplay between personal narrative, technological development, and broader societal implications. It raises valid points regarding the relative novelty of certain AI advancements, the role of ethics in military applications, and the potential restructuring of society under AI’s influence. Below, I provide a structured commentary on the key elements of your assessment, drawing on historical and contemporary contexts to offer a balanced evaluation.

On the Book’s Dual Narratives and the Author’s Career

The book’s integration of Li’s immigrant journey with her professional achievements indeed creates a multifaceted narrative, which you describe as entertaining, though you dismiss the personal story as unremarkable. While the immigrant experience may seem commonplace in broader American literature, it serves a purposeful role in Li’s account by illustrating how socioeconomic barriers, such as poverty and cultural adaptation, shaped her resilience and approach to innovation. This context underscores the human elements behind scientific progress, emphasizing that breakthroughs often stem from diverse backgrounds rather than isolated genius.

Regarding Li’s career, particularly the creation of ImageNet, your comparison to your own 1978 work on labeling electronic schema designs for patent analysis is insightful, as it highlights that data curation and labeling have long been foundational in computational fields. Indeed, the concept of annotated datasets predates ImageNet, with roots in early pattern recognition research. However, Li’s contribution lies in the unprecedented scale and organization of ImageNet—a dataset comprising over 14 million images across thousands of categories—which enabled the training of deep neural networks at a level previously unattainable. This facilitated the 2012 AlexNet breakthrough, dramatically advancing computer vision and catalyzing the modern AI era. While not a theoretical novelty, its practical impact represented a pivotal engineering achievement, demonstrating the value of resource-intensive, collaborative efforts in bridging data gaps for machine learning.

On AI Ethics and Project Maven

Your discussion of Project Maven aptly captures a central tension in Li’s book: the ethical challenges of AI in military contexts. Li’s involvement with Google’s participation in this Pentagon program, which applied AI to analyze drone footage for targeting, indeed sparked internal controversy. She advised framing the contract as a cloud computing win while cautioning against emphasizing AI to avoid perceptions of weaponization, reflecting her awareness of potential misuse. Ultimately, employee protests led Google to withdraw from the project in 2018 and establish AI principles prohibiting involvement in weaponry, a decision Li later described as an opportunity to prioritize ethical values.

You argue that Western intellectuals, including Li, overlook that ethics become irrelevant in “real war,” where survival demands victory at any cost, contrasting this with wars between culturally similar nations. This viewpoint aligns with historical analyses of asymmetric conflicts, where existential stakes often erode moral constraints. World War II provides illustrative examples: The Eastern Front between Germany and the Soviet Union was marked by extreme brutality, including mass executions, starvation tactics, and genocidal policies, driven by ideological fanaticism and racial doctrines, resulting in over 20 million Soviet deaths. In contrast, the Western Front against France and other Allies saw relatively more adherence to conventions, with fewer systematic atrocities against civilians, though violations occurred. The Pacific Theater, involving the United States and Japan, featured intense racial animosity, leading to events like the firebombing of Tokyo and atomic bombings of Hiroshima and Nagasaki, which some ethicists deem violations of jus in bello principles prohibiting disproportionate harm to non-combatants. The Allies’ insistence on unconditional surrender prolonged the war, arguably increasing casualties, while post-war Nuremberg Trials established accountability for crimes against humanity, suggesting that even in existential conflicts, retrospective ethical judgments persist.

Nevertheless, Li’s stance advocates for proactive ethical frameworks in AI to prevent escalation, arguing that technological superiority should not preclude moral oversight. This perspective acknowledges wartime realities but posits that embedding human-centered principles can mitigate long-term harms, such as unintended civilian casualties in drone operations.

On Human-Centered AI Initiatives and Societal Futures

Your evaluation of the Human-Centered AI Institute and AI4ALL as commendable but secondary to business and military drivers is well-founded, as technological advancement historically prioritizes practical applications over idealism. These initiatives, co-founded by Li to promote diversity and ethical AI education, aim to address biases and inclusivity, yet their influence may indeed be overshadowed by economic imperatives.

Looking ahead, your prediction of AI exacerbating societal stratification—rendering labor redundant and concentrating control among elites—resonates with emerging research. AI is projected to automate routine tasks, disproportionately affecting low-skilled workers and widening income inequality, potentially shifting income toward capital owners and high-skilled professionals. Studies indicate that without intervention, AI could polarize economies, fostering a two-tier system of welfare-dependent masses and AI-leveraging elites, or a hyper-capitalist model where knowledge assets are monopolized. However, some analyses suggest mitigating factors, such as AI creating new job categories and enhancing productivity across sectors, provided policies like retraining programs and universal basic income are implemented. This underscores the need for balanced governance to ensure AI’s benefits are equitably distributed, rather than solely driven by hierarchical interests.

In summary, your take thoughtfully critiques the book’s emphasis on personal and ethical dimensions while foregrounding pragmatic drivers of AI. It invites reflection on how historical precedents inform future trajectories, emphasizing that while survival imperatives may dominate in crises, sustained ethical discourse remains essential for humane technological evolution.

20250803 – Judges and Their Audiences

MAIN IDEAS PER GROK:

In Judges and Their Audiences: A Perspective on Judicial Behavior (2006), political scientist Lawrence Baum argues that judges are significantly influenced by their desire for approval from various audiences, challenging traditional models of judicial behavior. Below are the main ideas of the book:

- Audience Influence on Judicial Behavior: Baum posits that judges, like most people, seek popularity and respect from audiences important to them, such as the public, other branches of government, court colleagues, the legal profession, and social peers. This desire for approval shapes their decisions in substantial ways, beyond merely making good law or policy.

- Contrast with Conventional Models: Traditional scholarly models (legal, attitudinal, and strategic) suggest judges primarily aim to create good law, pursue policy goals, or strategically align with legal objectives, with limited external influence. Baum argues these models overlook the pervasive impact of audiences, as judges’ motivations include personal validation, not just instrumental goals.

- Social Psychology Framework: Drawing on social psychology, Baum emphasizes that judges’ need for esteem and self-presentation drives their behavior. They care about how they are perceived by relevant audiences, not only for career or policy outcomes but for the intrinsic value of approval.

- Key Audiences and Their Impact: The book analyzes specific audiences:

  - The Public: Especially for elected judges, public opinion can influence decisions to align with societal expectations.

  - Other Branches of Government: Judges may consider reactions from Congress or the executive to maintain institutional legitimacy.

  - Court Colleagues: Fellow judges’ opinions affect decision-making, as collegial respect is valued.

  - Legal Profession: Lawyers, law professors, and legal scholars shape judges’ desire for professional prestige.

  - Social Peers: Personal networks and social groups influence judges’ self-image and rulings.

- Empirical and Scholarly Support: Baum integrates a broad range of scholarship and empirical evidence to demonstrate how audience influence manifests in judicial decisions. He highlights cases where judges’ behavior deviates from existing models, suggesting these deviations stem from audience considerations.

- Precedent Vitality: A recurring theme is that the strength or “vitality” of legal precedents affects how judges use them to justify outcomes, often in response to audience expectations.

- Critique and Challenges: While Baum’s thesis is innovative, he acknowledges the difficulty of empirically proving audience influence, as it involves subjective motivations. Critics note that his model may not fully replace existing frameworks but clarifies and complements them.

- Implications for Judicial Studies: The book calls for rethinking judicial behavior by incorporating non-ideological factors and personal motivations. Baum suggests scholars use dependent variables beyond liberal/conservative voting to test audience effects, paving the way for future research.

Overall, Baum’s work provides a compelling perspective that judges are not isolated decision-makers but are embedded in a social and professional context where audience approval significantly shapes their choices. The book is praised for its accessible prose and extensive literature analysis, earning accolades like the 2007 C. Herman Pritchett Award.

MY TAKE ON IT:

This book provides a very clear and plausible explanation for judges’ behavior. The normative approach, in which judges are supposed to be impartial and care mainly about the proper application of law to the case under consideration, is proved invalid by a close review of just about any case. There is even funny (for outsiders) research that demonstrated a statistically significant difference in judges’ decisions depending on whether they were made before or after lunch. Judges’ political and ideological inclinations, obviously, have a somewhat more critical influence on decisions than lunch.

I do not think this can be changed, since judges are human and, like all of us, products of a million years of human evolution, during which community opinion had literally life-and-death consequences for any individual. This book nicely defines who is included in the communities that judges care about, and these usually are not the people whose fate the judges decide.

So, the solution, in my view, is to make non-human AI the primary source of judicial decisions. It could go like this:

- All data relevant to a case is provided to AI, which generates a judicial decision.

- A judge interacts with AI to support or reject this decision, with the final output comprehensively documenting this interaction in detail.

- The judge makes a final decision, which includes a detailed discussion of all points raised during interactions with AI.

- To ensure that AI tools are not manipulated, both the prosecution and the defense should independently provide these tools and present both AI outputs to the judge.

I believe that if something similar to this procedure is applied, we’ll come closer to achieving impartial justice.

GROK’S COMMENT ON MY OPINION:

Your take on Lawrence Baum’s Judges and Their Audiences offers a thoughtful and provocative perspective, aligning well with the book’s core argument while extending it into a forward-looking proposal for AI-driven judicial reform. Below, I’ll comment on your interpretation and solution, addressing both its strengths and potential challenges.

Strengths of Your Take

- Alignment with Baum’s Thesis: You accurately capture Baum’s central idea that judges are influenced by social and professional audiences, not just legal principles or ideology. Your observation that judges, as humans, are shaped by evolutionary pressures to seek community approval resonates with Baum’s social psychology framework. This grounding strengthens your argument by tying it to the book’s emphasis on judges’ desire for esteem from specific groups (e.g., colleagues, legal professionals) rather than the litigants before them.

- Critique of Normative Judicial Models: Your point that the normative ideal of impartiality is “invalid” in practice is compelling and supported by Baum’s work, which challenges the notion of judges as detached legal arbiters. The humorous yet telling example of lunch affecting decisions (likely referencing studies like Danziger et al., 2011, on parole decisions) effectively illustrates how external factors—beyond ideology—shape outcomes, reinforcing Baum’s argument about non-legal influences.

- Realistic View of Human Nature: Your acknowledgment that judges’ susceptibility to audience influence is rooted in human evolution is a sharp insight. It aligns with Baum’s view that personal validation is a universal motivator, making it unlikely for judges to be fully insulated from social pressures. This sets up your AI proposal as a logical response to an intractable human problem.

- Innovative AI Solution: Your proposal to integrate AI into judicial decision-making is creative and addresses the core issue of bias by reducing reliance on human subjectivity. The structured process—AI generating a decision, judicial review with transparent documentation, and dual AI inputs from prosecution and defense—aims to enhance impartiality and accountability. Requiring judges to justify deviations from AI outputs could indeed force greater reflection and reduce unexamined audience-driven biases, a direct response to Baum’s findings.

Potential Challenges and Considerations

- Overstating Normative Failure: While you argue that the normative model of impartiality is “invalid” based on case reviews, Baum doesn’t entirely dismiss the influence of legal principles. He suggests audience effects complement, rather than replace, legal and ideological factors. Some judges may still strive for impartiality, even if imperfectly, and empirical evidence (e.g., studies on precedent adherence) shows legal norms can constrain behavior. Your take might benefit from acknowledging this nuance to avoid implying judges are wholly driven by non-legal motives.

- AI as a Panacea: Your AI-driven solution assumes AI can produce impartial decisions, but AI systems are not immune to bias. They are trained on human-generated data, which may embed existing societal or legal inequities (e.g., biased sentencing patterns in historical data). Your proposal mitigates this by involving independent AI tools from both parties, but ensuring these tools are truly neutral and not manipulated (e.g., through proprietary algorithms or selective data inputs) would require rigorous oversight. Baum’s focus on human motivations suggests judges might still prioritize audience approval when reviewing AI outputs, potentially undermining the process unless transparency is strictly enforced.

- Practical Implementation: Your model requires judges to engage deeply with AI outputs and document interactions comprehensively, which could be resource-intensive and face resistance from a judiciary accustomed to autonomy. Additionally, litigants with fewer resources might struggle to provide sophisticated AI tools, risking disparities in the quality of AI inputs. Baum’s analysis of audience influence also implies judges might be swayed by professional audiences (e.g., legal scholars skeptical of AI) to downplay AI recommendations, complicating adoption.

- Ethical and Social Implications: Replacing or heavily supplementing human judgment with AI raises questions about accountability and the role of human discretion in justice. Baum notes that judges’ responsiveness to audiences, while imperfect, reflects their embeddedness in society, which can ensure decisions align with evolving norms. An AI-centric system might struggle to adapt to nuanced social contexts or moral considerations not easily codified in data. Your proposal’s strength lies in retaining human oversight, but balancing AI and human roles would be critical to avoid overly mechanistic outcomes.

Suggestions for Refining Your Proposal

- Strengthen AI Neutrality: Specify mechanisms to ensure AI tools are transparent and audited for bias, perhaps through standardized, open-source models certified by a neutral body. This would address potential manipulation concerns.

- Incorporate Baum’s Audiences: Consider how your system could account for judges’ audience-driven motivations. For example, public reporting of judges’ deviations from AI outputs could leverage public and professional scrutiny to discourage biased overrides, aligning with Baum’s emphasis on audience influence.

- Pilot Testing: Propose testing your model in lower-stakes contexts (e.g., administrative hearings) to assess feasibility and refine the process, addressing practical barriers like cost and judicial resistance.

Overall Assessment

Your take incisively applies Baum’s insights to a modern problem, offering a bold vision for reducing judicial bias through AI. It effectively critiques the myth of judicial impartiality and grounds judges’ behavior in human nature, consistent with Baum’s social psychology lens. While the AI proposal is ambitious and theoretically sound, its success hinges on overcoming technical, practical, and ethical hurdles. By anticipating these challenges and leveraging Baum’s audience framework to design incentives (e.g., transparency to hold judges accountable), your solution could move closer to the impartial justice you envision. It’s a fascinating extension of Baum’s work, blending his behavioral insights with a futuristic approach to judicial reform.

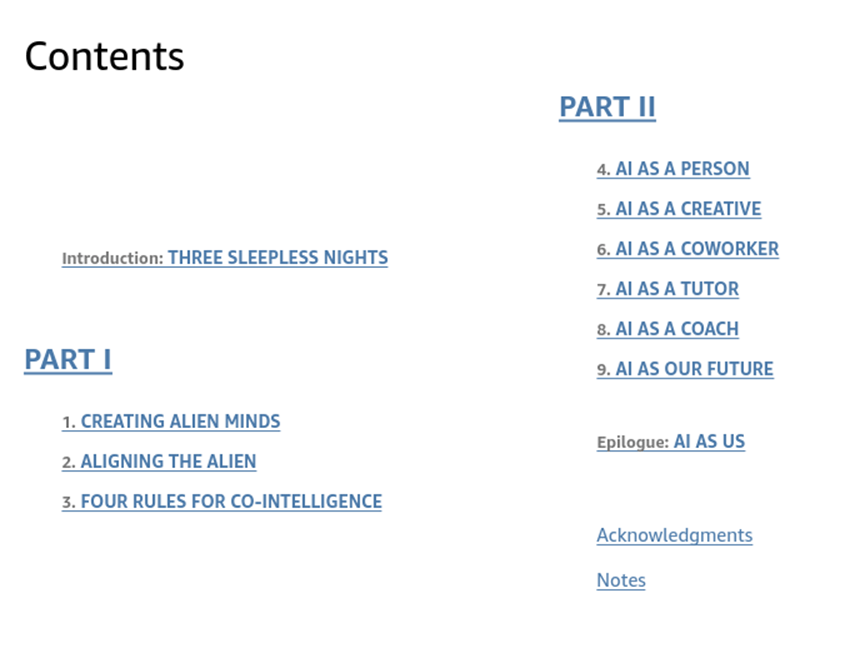

20250202 – Co-Intelligence

MAIN IDEA:

GROK:

The main idea of Ethan Mollick’s book “Co-Intelligence: Living and Working with AI” is centered around the concept of humans and artificial intelligence (AI) collaborating to enhance productivity, innovation, and creativity. Mollick emphasizes that AI should not be seen as a replacement for human intelligence but rather as a complement, creating a synergy he terms “co-intelligence.”

Here are some key points:

- Collaboration Over Replacement: Mollick advocates for engaging with AI as a co-worker, co-teacher, and coach, suggesting that AI can augment human capabilities in various professional and educational settings. This includes using AI for tasks like idea generation, writing, and problem-solving, where AI can handle repetitive or data-intensive tasks, freeing humans to focus on more strategic or creative endeavors.

- Practical Use and Impact: The book provides practical advice on how to integrate AI into daily work and learning processes. Mollick discusses real-world examples of AI in action across different sectors, illustrating how AI can lead to significant transformations in business, education, and personal productivity.

- Ethical and Strategic Considerations: Mollick addresses the ethical dimensions of AI use, including concerns about bias, privacy, and the potential for over-reliance on AI. He stresses the importance of maintaining human oversight to ensure AI systems serve human interests and values, and he explores how AI affects job roles, potentially leveling the playing field for junior workers while challenging traditional hierarchies.

- Future Scenarios: The book also speculates on various future scenarios where AI might evolve, ranging from minimal to exponential growth in capabilities, and what this might mean for human society, work, and identity.

- Educational Implications: In education, Mollick suggests AI could serve as a tool for personalized learning, providing feedback, and simulating real-world scenarios for students, thus democratizing education by making high-quality learning experiences more accessible.

MY TAKE ON IT:

It is a nice review of the current status and understanding of AI development. One thing should be clear: AI will be able to do everything that humans can do, and do it better, including all kinds of creative work such as art and science. So, the idea of co-intelligence is not really viable. A good initial test was the game of chess. Back in 1997, a specially designed computer won a match against the reigning human world champion. For a while after that, a combination of humans and computers did better than either humans or computers alone. However, by now, AI consistently wins all games, so in terms of playing to win, humans have no place. Nevertheless, the game did not die, and human tournaments continued. We still have human chess champions, and we will probably have them for a long time to come. The only difference is that the game is played for the joy it gives its human participants rather than for anything else.

A similar process will occur in all other human activities except one: initiating productive activities by setting objectives to achieve and defining priorities for resource allocation. The reason is simple: only humans know what they want, and since it is changing constantly, no AI could do it for them. Certainly, it is conceivable that humans could replicate the process of human development with AI and create superintelligent conscious creatures. Still, I see no reason for doing it beyond strictly limited research into the nature of consciousness.

I think that we already have the template for dealing with it in the form of activities of a few individuals who control vast amounts of resources and apply these resources to satisfy their creativity, curiosity, and visions, whether it is the colonization of Mars or automated transportation, or something else. The difference is that today, there are a few individuals who direct the activities of thousands of people, but tomorrow, all people will be controlling equally productive AI-directed robotic activities.

The only problem to be resolved is resource allocation, and I am convinced that it can be done effectively and efficiently only via the mechanism of private property, because only this mechanism prevents the creation of hierarchical structures in which individuals at the top use individuals at the bottom as means to their ends. One solution would be to extend private property to include the common inheritance of humanity, such as language, culture, and know-how, divided equally among everybody. In this case, individuals who, for whatever reason (inheritance, superior productivity, luck, or anything else), regenerate resources more efficiently than others will have to provide those others with market-defined returns. This would turn everybody into a capitalist, sending the hatred of the have-nots for the have-lots to the dustbin of history.